
Products Products Consent & Consumer Rights Overview

Experience automated privacy solutions that simplify compliance, minimize risk, and enhance customer trust across your digital landscape.

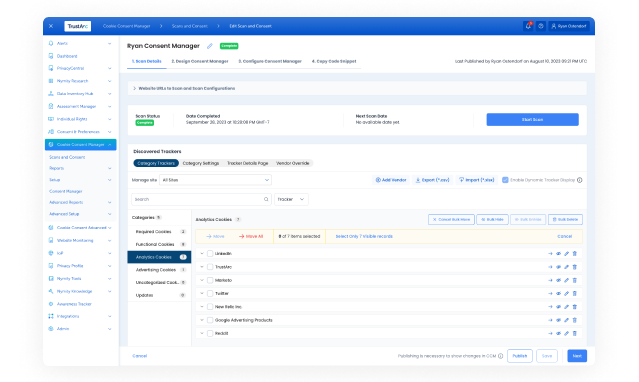

- Cookie Consent Manager Effortlessly manage cookie consent for global compliance, ensuring a secure, personalized browsing experience.

- Consent & Preference Manager Easily manage customer consent across brands and platforms—email, mobile, advertising—with a centralized repository.

- Individual Rights Manager Automate and streamline DSR workflows to ensure compliance and show your commitment to customer rights.

Products Privacy & Data Governance OverviewSimplify privacy management. Stay ahead of regulations. Ensure data governance with cutting-edge solutions.

- PrivacyCentral Centralize privacy tasks, automate your program, and seamlessly align with laws and regulations.

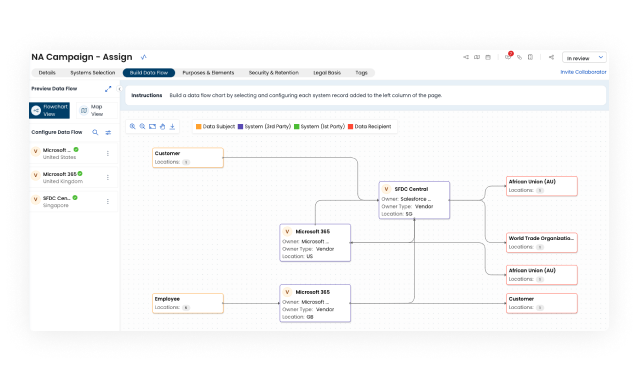

- Data Inventory Hub and Risk Profile Gain full visibility and control of your data and accurately identify and mitigate risks.

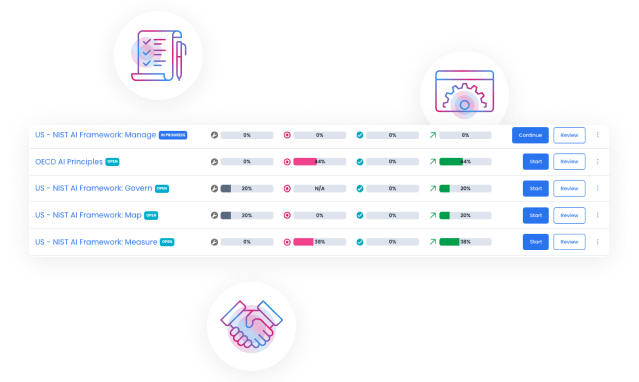

- Assessment Manager Automate and score privacy assessments like PIAs and AI Risk, streamlining your compliance workflow.

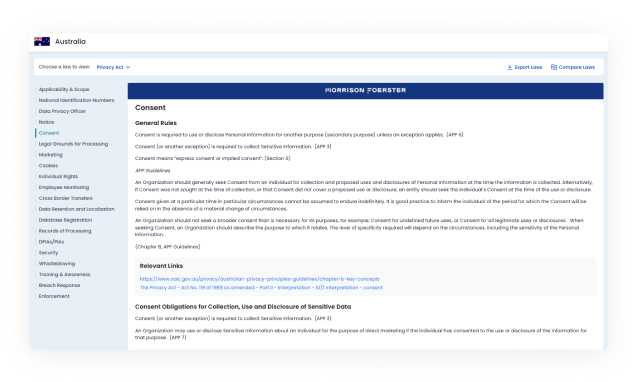

- Nymity Research Get instant access to the latest in privacy regulations, legal summaries, and operational templates.

Products Assurance & Certifications OverviewBoost brand trust with TRUSTe's certifications, showing your privacy commitment with the most recognizable seal, assessed by unbiased experts.

- Dispute Resolution

- TRUSTe Enterprise Privacy Certification

- TRUSTe EDAA Privacy Certification

- TRUSTe APEC CBPR and PRP Certification

- TRUSTe Data Collection Certification

- CCPA/CPRA Validation

- Data Privacy Framework Verification

- GDPR Validation

- Digital Advertising Alliance Validation

- Responsible AI Certification


Privacy & Data Governance

Navigate, automate, and certify your compliance

Trusted globally by 1,500 companies and counting

The numbers don't lie: create real ROI

- Achieve cost savings: 35% decrease in the total cost of proving compliance

- Accelerate compliance: 5-week decrease in time to compliance

- Reduce risk: $654K reduction in the cost of complying with privacy laws

- Avoid privacy incidents: 80% decrease in privacy incidents when using TrustArc products

Privacy compliance, custom-crafted for your business

Forge a global privacy program that elevates compliance through automation and strengthens customer trust.

- Empower trust in AI: Protect your AI's future by focusing on privacy and data governance. Our solutions promote ethical AI through strong data management, enhancing transparency and accountability.

- Streamline your privacy workflow: Simplify your privacy operations with automation that makes complex tasks, from data mapping to risk assessments, seamless.

- Automate global cookie and tracker management: Configure your cookies and trackers, including disclosures and consents, for effortless global compliance.

- Navigate regulations with confidence: Stay ahead of evolving privacy laws. Access regulatory insights, Morrison Foerster legal summaries, and 800+ operational templates for efficient, confident compliance.

Navigate the future of privacy confidently

TrustArc provides elite compliance solutions, trust-building certifications, and data governance tools. Streamline your operations and make privacy your differentiator.

eBook

Accountable AI

Get your go-to guide for mastering Accountable AI in privacy. Dive into the world of AI and privacy regulations while discovering a practical roadmap to align your organizational needs with individual rights.